
AI Chat GPT OpenAI HTML 5 – GPT 4o and GPT4 mini ready

Original price: 1.245.090₫. Current price: 249.018₫.

Chat GPT-3 OpenAI HTML 5 Version

See it working: https://smartanimals.polartemplates.com/v1/

This is a template built in HTML5.

The template's capabilities include generating intelligent responses, continuing dialogues, generating code, replying to texts, and much more.

In the template, you can chat with ten intelligent animals, each set up with AI to specialize in a specific subject. You can also create your own intelligent animal, specialized in a subject of your choice, by editing a JSON file.

- Easy to customize

- Clear documentation

- Responsive (mobile compatible)

- Includes HTML, CSS and JavaScript files

- Add, delete and modify animals via a JSON file

- PSD assets not included

What You Get

- Documentation

- HTML, CSS, PHP and JavaScript files

Notes

- You can test the template on a local server or host the files with your preferred hosting provider.

- No database is used.

- The chat model used is InstructGPT.

- Fine-tuned models are not available in the template.

- Current models: gpt-3.5-turbo, gpt-3.5-turbo-16k, gpt-4, gpt-4-32k, text-davinci-003

- This is not a SaaS product.
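The changelog gives a context length for each supported chat model. As a rough illustration of why that matters when picking a model, here is a minimal Python sketch, assuming the prompt and the reply share a single context window. The dictionary and both function names are hypothetical and not part of the template, and the 4,096-token figure for gpt-3.5-turbo is derived from the changelog's "4 times the context" note rather than stated directly.

```python
# Context windows in tokens, taken from the changelog. The 4,096 figure for
# gpt-3.5-turbo follows from "4 times the context" of gpt-3.5-turbo-16k.
CONTEXT_WINDOWS = {
    "gpt-3.5-turbo": 4096,
    "gpt-3.5-turbo-16k": 16384,
    "gpt-4": 8192,
    "gpt-4-32k": 32768,
}


def fits_context(model, prompt_tokens, max_completion_tokens):
    """True if the prompt plus the longest allowed reply fit in the window."""
    return prompt_tokens + max_completion_tokens <= CONTEXT_WINDOWS[model]


def smallest_fitting_model(prompt_tokens, max_completion_tokens, candidates):
    """Return the candidate with the smallest window that still fits, or None."""
    fitting = [m for m in candidates
               if fits_context(m, prompt_tokens, max_completion_tokens)]
    return min(fitting, key=CONTEXT_WINDOWS.get) if fitting else None


print(smallest_fitting_model(3500, 1000, ["gpt-3.5-turbo", "gpt-3.5-turbo-16k"]))
# gpt-3.5-turbo-16k
```

Preferring the smallest window that fits is just one possible policy; the template itself lets you set the model per animal.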

Requirements

- You need an OpenAI API key, which can be obtained from the OpenAI website.

- A PHP version between 7.4.9 and 8.2.1 is recommended.

- Your website must have SSL (HTTPS) enabled.

Test the TextStream on your server.

https://polartemplates.com/text-stream/

Changelog

==========06/14/2023==========

- Added new models:
  - gpt-3.5-turbo-16k: same capabilities as the standard gpt-3.5-turbo model but with 4 times the context (16,384 tokens).
  - gpt-4: more capable than any GPT-3.5 model and able to handle more complex tasks (8,192 tokens).
  - gpt-4-32k: same capabilities as the base gpt-4 model but with 4 times the context length; will be updated with OpenAI's latest model iteration (32,768 tokens).

- Please note: to use GPT-4, your API key must have GPT-4 access; a key with GPT-3 access only is currently not compatible. GPT-4 access requires approval through a waitlist signup, available at https://openai.com/waitlist/gpt-4-api

==========03/29/2023==========

- Individual chats with animals can now be opened through a URL (chat directly with an animal without selecting it from the list).

- Option to add privacy policy text to the site's footer (displayed in a modal).

- Pre-configuration in the app.js file for a future GPT-4 chat update. Note that GPT-4 will only be made available once it is out of beta.

- The model ("Chat GPT Turbo" or "text-davinci-003") can now be set individually for each animal.

==========03/20/2023==========

- Added 11 new intelligent animals.

- Added a button to copy text from the chat.

- Added a button to cancel sending a message.

- Added a text stream feature. It is not compatible with all servers; it only works on servers without caching, output buffering or GZIP compression.

- Download chat history.

- Clear chat history.

- Chat history is saved when the page is refreshed.

- Shuffle characters.

- Added 2 more characters.

- GPT-3.5 Turbo: the project now includes the GPT-3.5 Turbo model, the same one used in the original ChatGPT. This model is 10x cheaper than text-davinci-003.

- DALL•E 2 API: you can now generate images directly in the chat by typing /img followed by a description of the image to generate.

- Updated project structure: characters, settings and languages are now in separate files.

- HTML injection: protection against HTML code injection has been added.

- Improved design of the download and clear-chat buttons.

- The date and time each message was sent are now displayed.

- Added the Toasty and SweetAlert libraries for alerts.

- Fixed the inappropriate-word filter: filtering is now done when the message is sent.

- Temperature and penalty can be set individually for each character.

- Documentation has been rewritten with more details.

- Enhanced security: the option to use the API key on the front end has been removed. The key can now only be used on the server, through a PHP file, to keep it secure.

- The options "chat_minlength", "chat_maxlength" and "max_num_chats_api" are now managed individually for each character in the character.json file.

- New AI character: Chroma.

- At the request of some clients, the old chat version has been made available.
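The 03/20/2023 entries mention HTML-injection protection, a word filter applied when a message is sent, per-character "chat_minlength"/"chat_maxlength" limits and the /img command. The Python sketch below shows one way such checks could fit together before a message goes out. It is not the template's code (which is JavaScript and PHP); BLOCKED_WORDS, CHARACTER, prepare_message and render_safe are hypothetical names, and the limit values are placeholders.

```python
import html
import re

# Hypothetical per-character limits, named after the character.json options
# from the changelog; the real values live in the template's config.
CHARACTER = {"chat_minlength": 2, "chat_maxlength": 500}
BLOCKED_WORDS = {"badword"}  # placeholder filter list


def prepare_message(text, character=CHARACTER):
    """Validate a user message at send time.

    Returns ("image", prompt) for "/img ..." commands and ("chat", text)
    otherwise. Raises ValueError if the message is rejected.
    """
    text = text.strip()
    if not character["chat_minlength"] <= len(text) <= character["chat_maxlength"]:
        raise ValueError("message length out of range")
    # Word filter runs when the message is sent, on whole words only.
    if any(word in BLOCKED_WORDS for word in re.findall(r"\w+", text.lower())):
        raise ValueError("message contains a blocked word")
    if text.startswith("/img "):
        return "image", text[len("/img "):].strip()
    return "chat", text


def render_safe(text):
    """Escape &, <, > and quotes so chat text is displayed, not parsed as HTML."""
    return html.escape(text)
```

Escaping happens at display time in this sketch, so the text sent to the API stays unchanged while anything shown in the chat window cannot inject markup.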

How the API works

The template connects directly to the OpenAI API. Each request the chat makes to the API consumes tokens, which are paid for with credits on your OpenAI account. The number of tokens consumed depends on the size and type of the request and response, as well as the model used.

Users can buy additional credits as needed and monitor usage through their OpenAI account at https://platform.openai.com/account/usage

The cost depends on the number of tokens in each question and answer processed by the API. Once all credits are used up, no further API requests can be made until more credits are purchased.
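To make "each request" concrete, here is a minimal Python sketch of a single call to OpenAI's Chat Completions endpoint. The template does this from a PHP file on the server; this sketch only mirrors the general request shape. It builds the request without sending it, and treating a character's custom prompt as the system message is an assumption about how the template works. build_chat_request and YOUR_API_KEY are hypothetical placeholders.

```python
import json
import urllib.request

# OpenAI Chat Completions endpoint.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key, model, system_prompt, user_message,
                       temperature=0.7, stream=False):
    """Build, but do not send, one Chat Completions request.

    system_prompt stands in for a character's custom prompt (an assumption
    about how the template combines prompts and messages).
    """
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
        "temperature": temperature,
        "stream": stream,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Build this on the server only, so the key never reaches the browser.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_chat_request("YOUR_API_KEY", "gpt-3.5-turbo",
                         "You are an owl who only talks about astronomy.",
                         "Why is the night sky dark?")
print(req.get_method(), req.full_url)
# urllib.request.urlopen(req) would actually send it and consume tokens.
```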

Users can check the price of each token and model via the following link:

https://openai.com/pricing
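As a worked example of how token counts turn into cost, the Python sketch below multiplies the token counts from a response's "usage" object by per-1,000-token prices. The prices and token counts are placeholders, not current OpenAI rates; always take the real figures from the pricing page above. estimate_cost is a hypothetical helper name.

```python
from decimal import Decimal


def estimate_cost(prompt_tokens, completion_tokens,
                  price_in_per_1k, price_out_per_1k):
    """Estimate the USD cost of one request.

    Prices are per 1,000 tokens and passed as strings so Decimal keeps
    them exact. Input (prompt) and output (completion) are priced separately.
    """
    return (Decimal(prompt_tokens) / 1000 * Decimal(price_in_per_1k)
            + Decimal(completion_tokens) / 1000 * Decimal(price_out_per_1k))


# Token counts as reported in the "usage" object of an API response
# (sample numbers, not real measurements).
usage = {"prompt_tokens": 1000, "completion_tokens": 500, "total_tokens": 1500}

# Placeholder prices; check the pricing page for the model you use.
cost = estimate_cost(usage["prompt_tokens"], usage["completion_tokens"],
                     "0.0015", "0.002")
print(cost)  # 0.0025
```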

FAQ

Is the product built on WordPress?

No, our product is developed entirely using HTML5, CSS3, PHP (for the API), and JavaScript. However, it can be integrated into a WordPress page if necessary.

Is this a SAAS product?

No. Our goal with this product is to provide an HTML5 widget integrated with the ChatGPT API. The product does not include an administration panel; the aim is to let users buy the template and easily integrate it into their own systems as needed. We plan to launch a SaaS version with a CMS panel in the future.

Do I need an OpenAI key to use the product?

Yes, it is mandatory to have an OpenAI key to use the model.

How is AI updated?

AIs are updated through a JSON file. You can add, edit or remove AIs and customize their behavior with custom prompts.
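The sketch below illustrates the JSON-driven approach in Python. Apart from "chat_minlength", "chat_maxlength" and "max_num_chats_api", which the changelog names, every field in this character.json sample is hypothetical, and reading "max_num_chats_api" as "how many previous messages are sent to the API" is an assumption. Adding an animal, in this sketch, means appending another object with its own id and prompt.

```python
import json

# Hypothetical character.json content; only chat_minlength, chat_maxlength
# and max_num_chats_api are option names taken from the changelog.
CHARACTERS_JSON = """
[
  {
    "id": "owl",
    "name": "Astronomy Owl",
    "model": "gpt-3.5-turbo",
    "temperature": 0.7,
    "prompt": "You are a wise owl who only answers questions about astronomy.",
    "chat_minlength": 2,
    "chat_maxlength": 500,
    "max_num_chats_api": 4
  }
]
"""


def load_characters(raw):
    """Index the characters by id."""
    return {c["id"]: c for c in json.loads(raw)}


def build_messages(character, history, user_message):
    """Prepend the character's prompt and keep only the most recent
    max_num_chats_api history entries (assumed meaning of that option)."""
    limit = character["max_num_chats_api"]
    recent = history[-limit:] if limit > 0 else []  # history[-0:] would keep everything
    return ([{"role": "system", "content": character["prompt"]}]
            + recent
            + [{"role": "user", "content": user_message}])


owl = load_characters(CHARACTERS_JSON)["owl"]
messages = build_messages(owl, [], "What is a light-year?")
```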

Why isn’t my text streaming function working?

For the streaming function to work correctly, the server must not have gzip compression or any other extension that caches, buffers or compresses the output before it reaches the browser. Otherwise, the text is displayed all at once instead of in chunks as they arrive, which is the expected behavior of text streaming.
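For context, when streaming is enabled the OpenAI API sends the reply as a series of server-sent events, each a "data: {...}" line carrying a small piece of text, ending with "data: [DONE]". If gzip or an output buffer sits in between, these events are held back and arrive together, which is why the text appears all at once. The Python sketch below only shows how such lines are turned back into text on the receiving side; it is not the template's PHP/JavaScript code, and iter_stream_text is a hypothetical name.

```python
import json


def iter_stream_text(lines):
    """Yield text fragments from OpenAI Chat Completions stream lines.

    Each event line looks like 'data: {...}' and the stream ends with
    'data: [DONE]'. Blank separator lines are skipped.
    """
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break
        chunk = json.loads(payload)
        # The first chunk usually carries only the role; the last may be empty.
        delta = chunk["choices"][0].get("delta", {})
        if delta.get("content"):
            yield delta["content"]


sample = [
    'data: {"choices":[{"delta":{"role":"assistant"}}]}',
    "",
    'data: {"choices":[{"delta":{"content":"Hel"}}]}',
    'data: {"choices":[{"delta":{"content":"lo!"}}]}',
    'data: {"choices":[{"delta":{},"finish_reason":"stop"}]}',
    "data: [DONE]",
]
print("".join(iter_stream_text(sample)))  # Hello!
```

Each fragment can be shown as soon as it is yielded; on the server side, this only works if every chunk is flushed to the browser immediately instead of being compressed or buffered.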

It’s worth noting that this streaming function is 100% optional, and the product will work normally without it.

We can recommend a server that is fully compatible with this function. If you have any questions, please feel free to contact us. Thank you. Test the TextStream on your server:

https://polartemplates.com/text-stream/

Get 1 free theme/plugin with orders over 140k:

Flatsome, Elementor Pro, Yoast SEO Premium, Rank Math Pro, WP Rocket, JNews, Newspaper, Avada, WoodMart, XStore

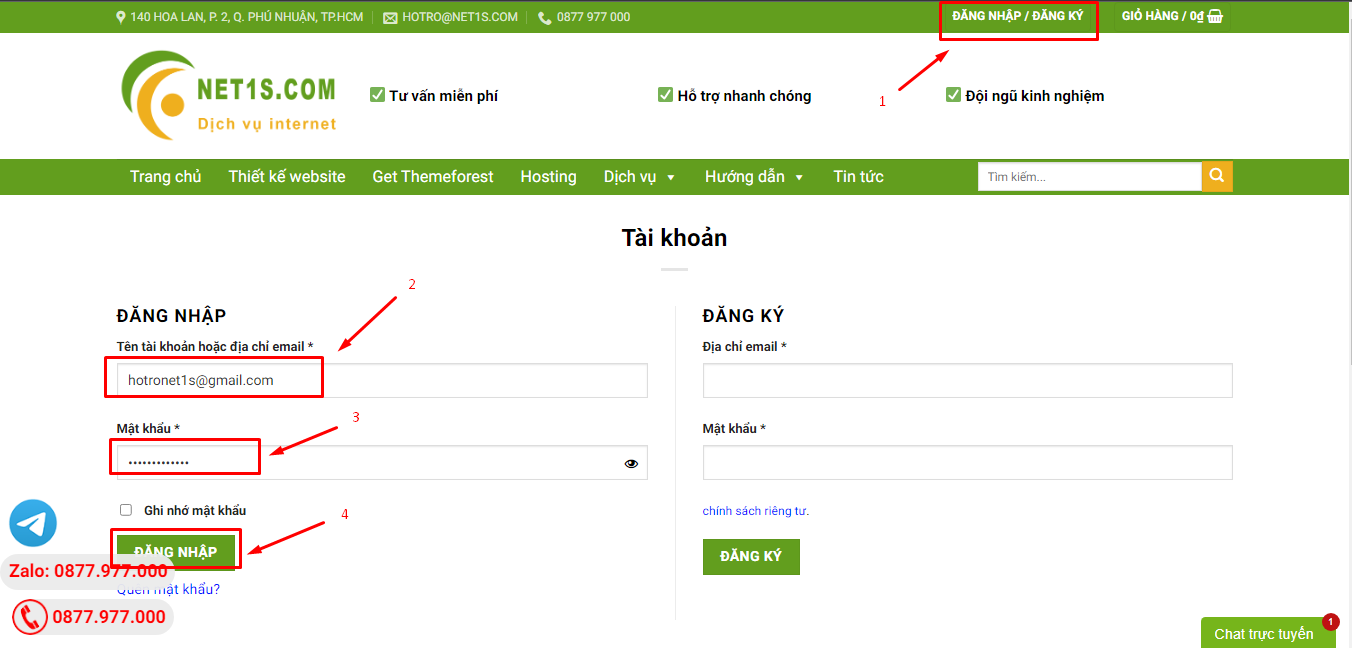

1. Click Log in/Register.

2. Enter the email and password you used when purchasing -> click Log in.

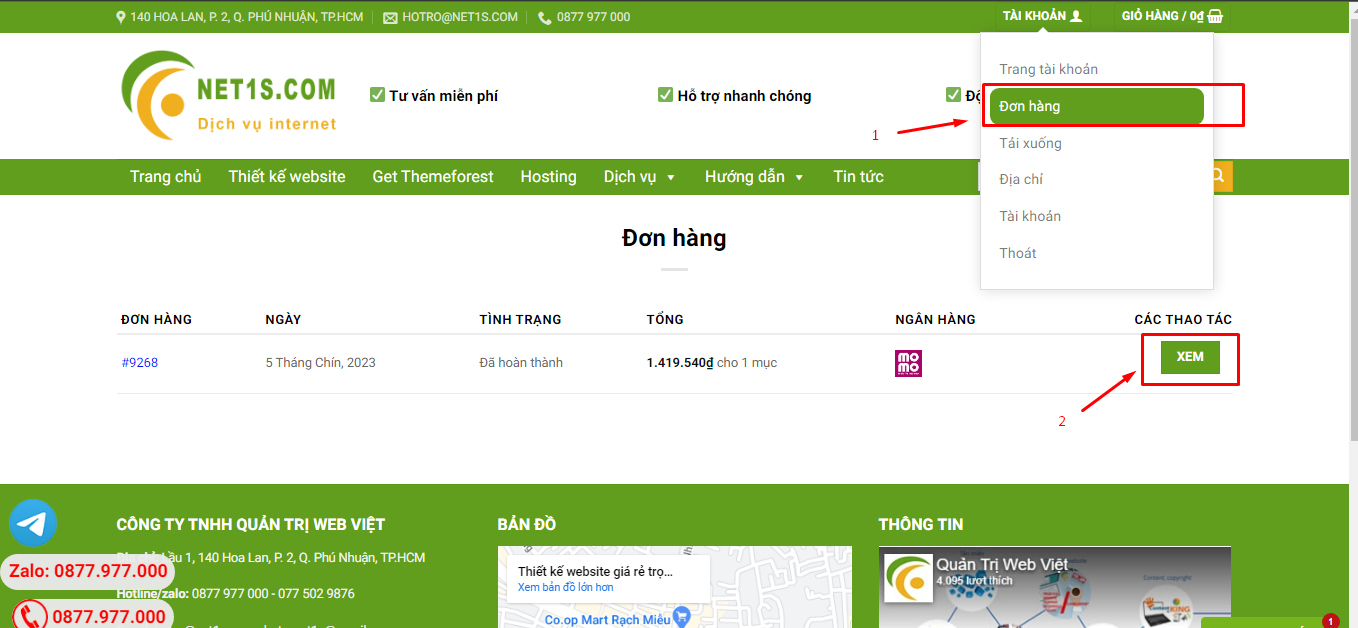

3. Hover over Account -> Orders -> click View on the order you purchased.

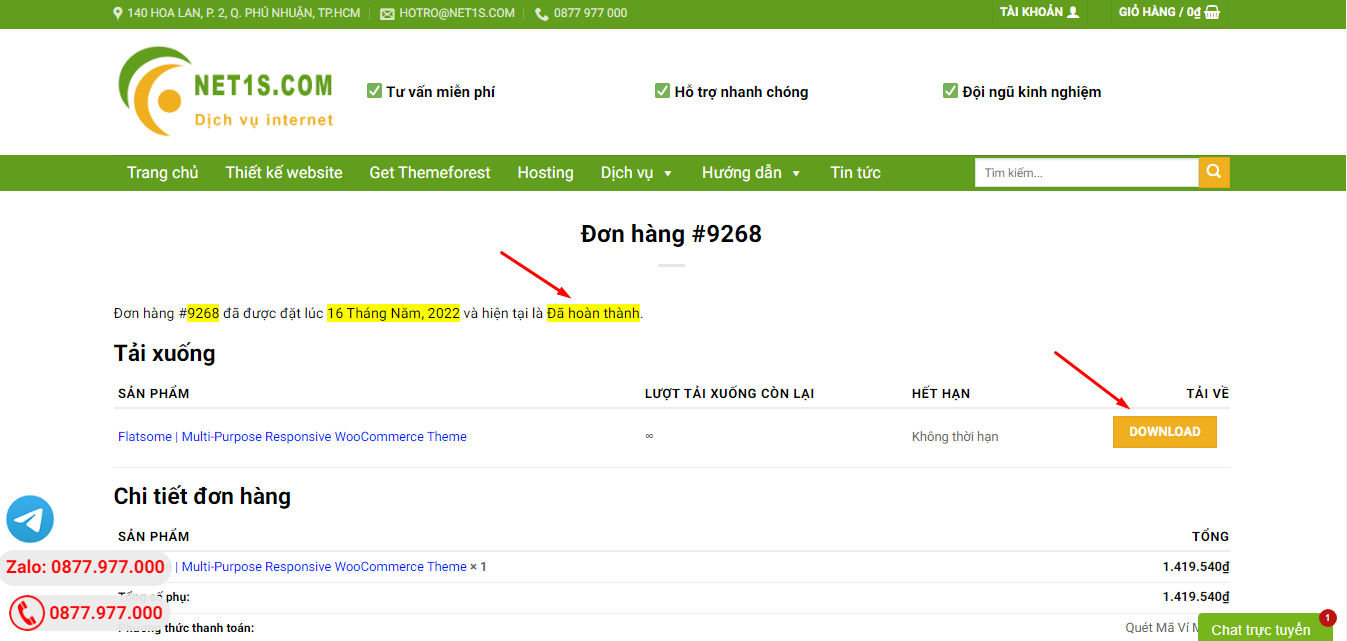

4. When the order status shows Completed -> click Download to download the product.

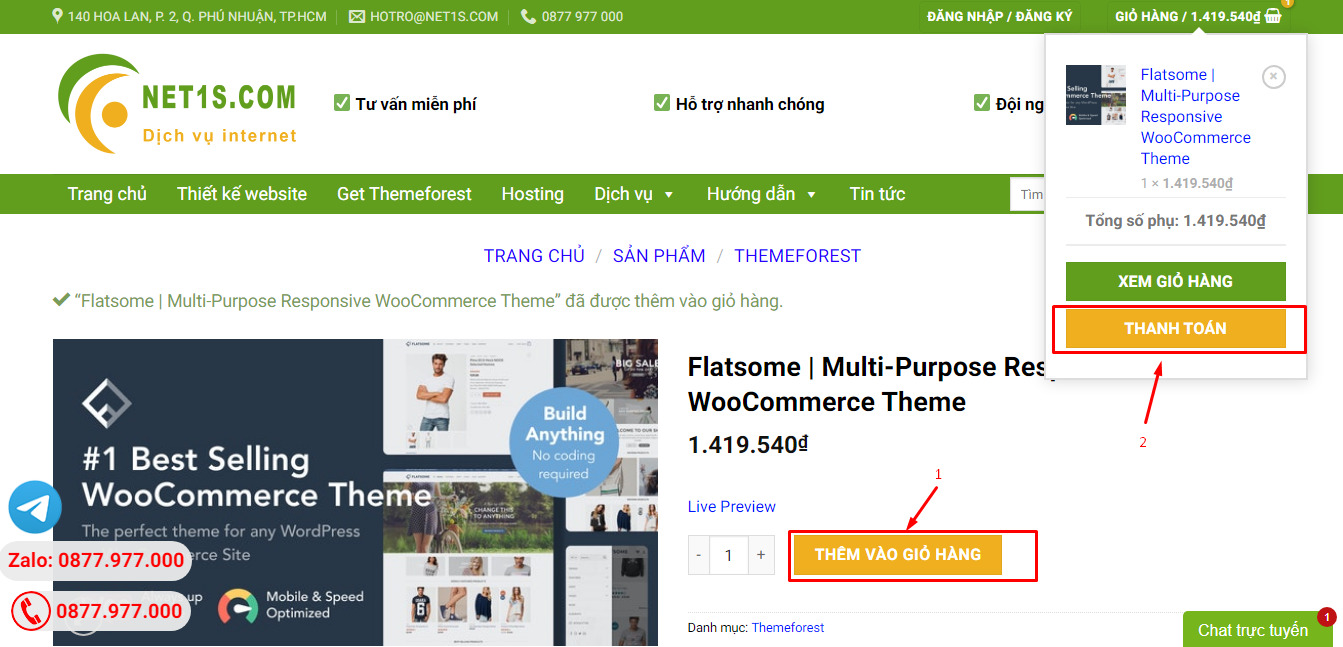

1. Click Add to cart -> the cart panel will appear in the top right corner.

2. Click Checkout.

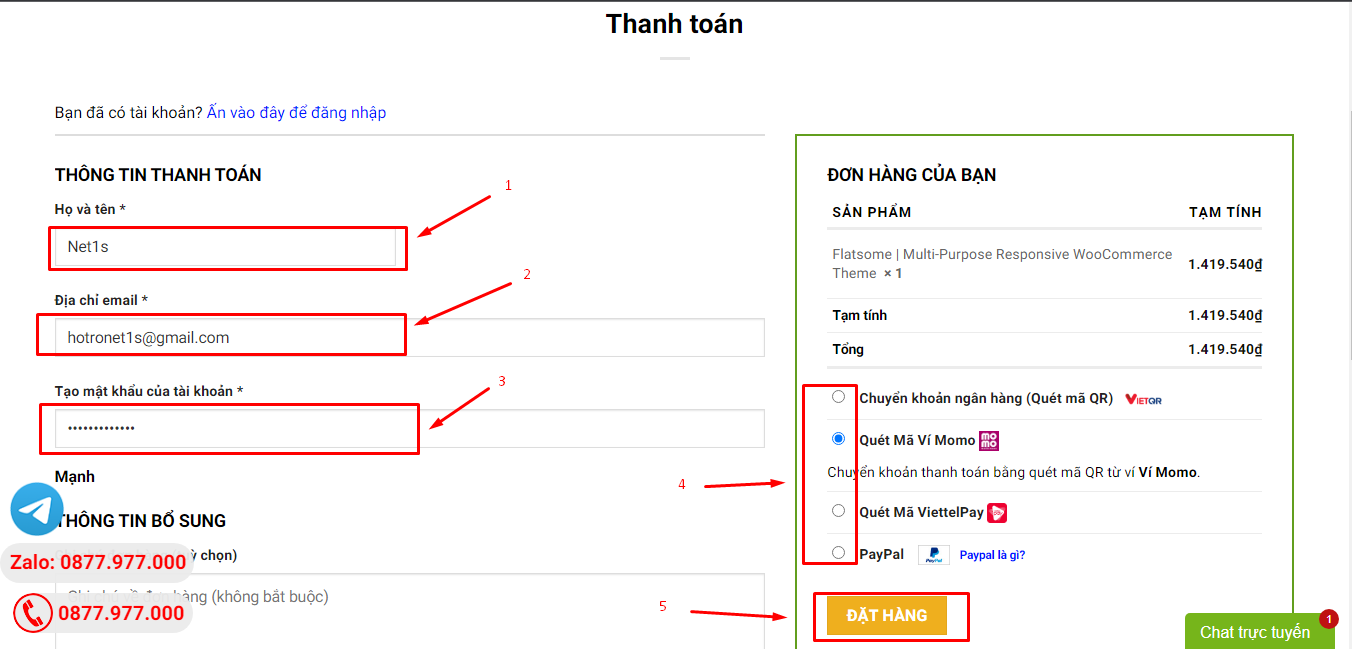

3. Enter your billing details: name, email and password.

4. Choose one of the supported payment methods: bank transfer (scan QR code), Momo QR code, ViettelPay QR code, or PayPal.

5. Click Place order to continue.

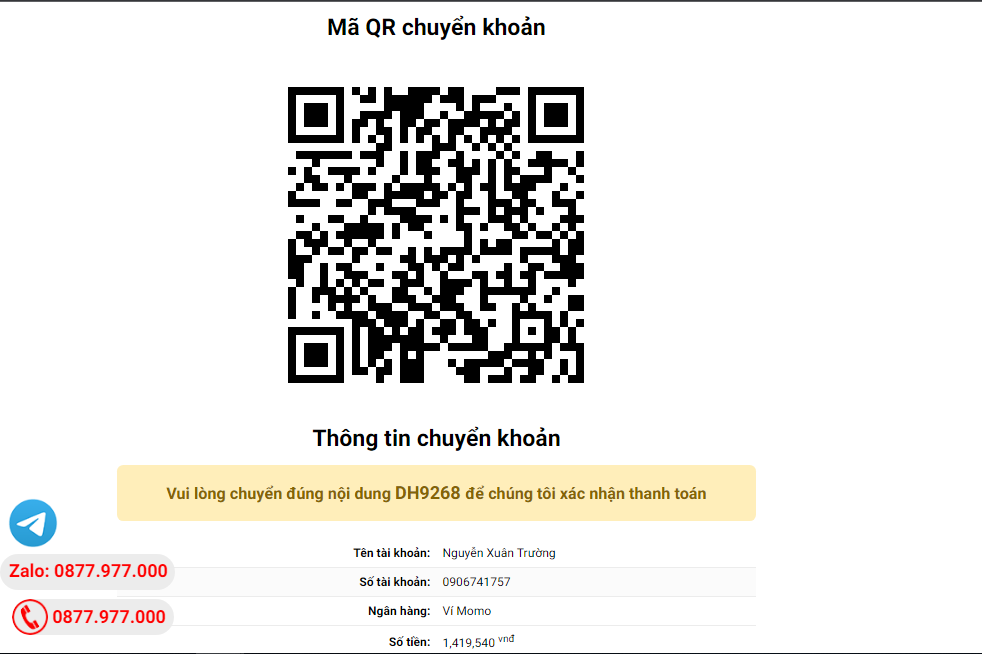

6. Pay by scanning the QR code (the transfer description and amount are generated automatically), or make a bank transfer and enter the amount and transfer description as instructed.

7. Once payment is complete, we will mark your order as Completed, and you can go to Orders to download the products you purchased.